INSTINCT challenge

Intelligence Advanced Research Projects Activity (IARPA)

Summary

The INSTINCT (Investigating Novel Statistical Techniques for Identifying Neural Correlates of Trustworthiness) Challenge called on members of the American public to develop innovative new algorithms that would help improve the prediction of individual trustworthiness. The solvers made use of research conducted by the IARPA teams during the first phase of the TRUST (Tools for Recognizing Useful Signals of Trustworthiness) program, which aimed to improve the understanding and assessment of trustworthiness under realistic conditions.

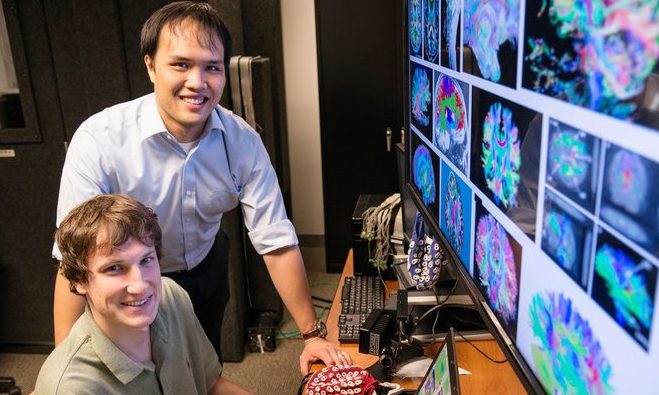

The winners of the IARPA INSTINCT Challenge pose next to brain imaging that helped choose algorithms for the competition. (Photo courtesy of BAE Systems)

The TRUST research resulted in large amounts of neural, physiological and behavioral data recorded in experiments in which volunteers decided whether or not to keep promises that they had made. IARPA suspected that this dataset might have untapped potential to predict trustworthiness in individuals. However, it was unclear which analytic techniques were appropriate to apply to the data.

The INSTINCT Challenge sought solutions in the form of new feature selection methods and algorithms that would yield new insights into how to best analyze the TRUST data and lead to improved accuracy in recognizing who was trustworthy or not. In addition, as this was IARPA's first challenge, it sought to engage new people and communities with the organization's problem-solving opportunities and provide lessons for the development of future challenges.

IARPA worked with the Air Force Research Lab (AFRL) on the challenge through a Program Level Agreement (PLA). AFRL prepared the data set for the challenge and provided support based on its previous experience with challenges. The INSTINCT Challenge and data set were hosted on the InnoCentive prize platform.

Results

The challenge offered $25,000 for the top-performing algorithm that beat the performance of the baseline analysis. If other teams beat the baseline, $15,000 was offered as a second prize, and $10,000 was offered as a third prize.

One winner was chosen from 39 entries and awarded the $25,000 first prize. The winning team connected focused expertise with broader interdisciplinary interests in neuroscience, data and engineering. The winning solution, called JEDI MIND (Joint Estimation of Deception Intent via Multisource Integration of Neuropsychological Discriminators), improved on IARPA's baseline data analysis by 15 percent.

The challenge attracted considerable attention. IARPA continued to receive interest in working with the TRUST data following the close of the challenge. This has led to an exploration of new ways to make such data publicly available in the future. The challenge was also successful in engaging new communities by increasing awareness of the high-risk, high-reward research that falls under IARPA's mission.

Areas of Excellence

Area of Excellence #1: "Build a Team"

IARPA's internal team included the program manager and contract support for the TRUST program, out of which the challenge grew. The AFRL team had been involved with the program, providing independent test and evaluation. IARPA also invited government personnel involved with the creation and implementation of previous federal data analysis challenges to participate in an advisory steering committee. The committee provided input on lessons learned at the start of the INSTINCT design process, and continued to provide advice and feedback throughout development and implementation. The committee included members from NASA, the U.S. Agency for International Development, National Science Foundation, Defense Threat Reduction Agency and Defense Advanced Research Projects Agency.

Area of Excellence #2: "Define Evaluation and Judging Process"

Evaluation was objective and quantitative. Participants first created their algorithms using a training data set which included the outcomes-to-be-predicted. They then downloaded a test data set which did not include those outcomes and submitted their algorithms' outcome predictions. They could submit up to once per day throughout the open challenge period, with the 10 highest scores appearing on a leaderboard. At the end of the open period, solvers who had created algorithms with performance that exceeded the threshold could submit their full code with documentation to the challenge organizers. Solvers were aware that only those who submitted full code with documentation would be considered for a prize. AFRL then evaluated these algorithms using a third, withheld portion of the data, and performance against this data set was used to determine challenge winners.
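The submission rules above (at most one submission per day, with the 10 highest scores shown) can be sketched in a few lines of Python. This is a minimal illustration only: the `Submission` and `Leaderboard` names, the per-solver interpretation of the daily limit and the in-memory storage are assumptions, not a description of the InnoCentive platform.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Submission:
    solver: str    # hypothetical solver identifier
    day: date      # calendar day of the submission
    score: float   # score on the test (leaderboard) data set


class Leaderboard:
    """Hypothetical leaderboard: one submission per solver per day, top N shown."""

    def __init__(self, top_n=10):
        self.top_n = top_n
        self._seen = set()        # (solver, day) pairs already used
        self._submissions = []

    def submit(self, sub):
        """Record a submission; return False if the daily limit was already used."""
        key = (sub.solver, sub.day)
        if key in self._seen:
            return False
        self._seen.add(key)
        self._submissions.append(sub)
        return True

    def top(self):
        """Return the highest-scoring submissions, best first."""
        ranked = sorted(self._submissions, key=lambda s: s.score, reverse=True)
        return ranked[: self.top_n]
```

A second submission from the same solver on the same day is rejected rather than overwriting the first, which is one simple way to enforce a daily limit.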

In dividing the data set, AFRL gave the largest share to the training set (40 percent of the full data), the next largest to the reserved evaluation set (35 percent) and the smallest to the leaderboard set (25 percent). This weighting maximized the amount of data in the training set while retaining a reserved evaluation set large enough to minimize the possibility that random chance would produce spuriously high accuracy for a particular solution. The percentages were chosen based on common practices in machine learning research and favored the training and final evaluation sets in order to maximize training efficacy and validation accuracy.
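A three-way split with these proportions might look like the sketch below. How AFRL actually partitioned the data (for example, by trial or by participant) is not stated here, so the simple random shuffle, the function name `split_dataset` and the fixed seed are all assumptions made for illustration.

```python
import random


def split_dataset(records, fractions=(0.40, 0.25, 0.35), seed=0):
    """Shuffle records and split into (training, leaderboard, evaluation).

    fractions gives the training, leaderboard and evaluation shares in order.
    """
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)   # seeded so the split is reproducible
    n = len(shuffled)
    n_train = round(n * fractions[0])
    n_board = round(n * fractions[1])
    training = shuffled[:n_train]
    leaderboard = shuffled[n_train:n_train + n_board]
    evaluation = shuffled[n_train + n_board:]   # remainder goes to evaluation
    return training, leaderboard, evaluation


training, leaderboard, evaluation = split_dataset(range(100))
print(len(training), len(leaderboard), len(evaluation))
```

Because the three sets are disjoint slices of one shuffled list, no record can appear in more than one of them.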

Area of Excellence #3: "Identify Goal and Outcome Metrics"

The central goal of INSTINCT was to improve prediction of trustworthiness.

INSTINCT also had special visibility as IARPA's first challenge, and therefore sought to attract new potential partners and performers and help them become familiar with IARPA and IARPA's research opportunities. Outreach metrics helped measure how well it attained these goals, and also helped create lessons for future challenges.

Outreach and engagement goals were measured through extensive tracking of site visits, registrations, submissions and referral sites. IARPA's internal team used Google Analytics for these measures, along with figures reported by the challenge management company. These measures suggested that some outreach efforts exceeded expectations, while others underperformed. The largest number of visits came from IARPA's initial outreach push and ongoing Twitter posts, an e-newsletter ad, an agency-level Facebook post and the challenge management contractor's own outreach to its solver pool. A live presentation at a federal conference for algorithm developers also resulted in a registration surge. However, banner ads on a major scientific journal website received a relatively low click-through rate.

Area of Excellence #4: "Accept Solutions"

Submission requirements varied over the course of the challenge. The requirements were minimal for the leaderboard evaluation but increased for participants whose performance passed the initial threshold. Training data, including the outcomes-to-be-predicted, were provided for initial creation of algorithms. To evaluate the performance of these algorithms on test data (no outcomes included), IARPA required only CSV files (which can be produced in a spreadsheet tool such as Excel) showing the algorithm's outcome predictions, submitted up to once a day through a form on the challenge website. Feedback was provided via a publicly viewable leaderboard.
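As a rough illustration of how a predictions file of this kind could be read for scoring, the sketch below parses a CSV into a dictionary. The column names `trial_id` and `prediction` and the 0/1 label coding are hypothetical; the actual file layout used in the challenge is not described here.

```python
import csv
import io


def read_predictions(csv_text):
    """Parse a predictions CSV into {trial_id: predicted_label}.

    Assumes a header row with the hypothetical columns 'trial_id' and
    'prediction', where 1 means trustworthy and 0 means untrustworthy.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    predictions = {}
    for row in reader:
        label = int(row["prediction"])
        if label not in (0, 1):
            raise ValueError(f"unexpected label {label} for trial {row['trial_id']}")
        predictions[row["trial_id"]] = label
    return predictions


example = "trial_id,prediction\nt1,1\nt2,0\n"
print(read_predictions(example))
```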

At the end of the open period, algorithms with performance that exceeded a threshold could be submitted as full code with documentation to the challenge organizers. These algorithms were then run by the challenge owners themselves against a third, evaluation portion of the data, and performance against this data set was used to determine challenge winners.

Area of Excellence #5: "Judge Submissions and Select Winners"

Predictive performance at both the test and evaluation stages was calculated using d' (d-prime), a measure that takes into account both the ability to accurately predict an outcome of interest (here, trustworthy behavior) and the ability to avoid incorrectly indicating such an outcome when it is not present (i.e., false alarms). A d' value gives the number of standard deviations (in a unit normal distribution) separating two data distributions, in this case the distributions of signals associated with the "trustworthy" and "untrustworthy" outcomes. The minimum threshold for a prize was d' = 0.7. Using d' weights performance on trustworthy and untrustworthy predictions equally, so an algorithm cannot appear superior simply by biasing one type of prediction. This was vital because the base rate of trustworthy behavior was extremely high, and a simpler measure of overall accuracy would have given high ratings to algorithms that simply predicted trustworthiness for all trials.
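The contrast between d' and plain accuracy can be made concrete with a small sketch. It uses the standard signal-detection formula, d' = z(hit rate) − z(false-alarm rate), where z is the inverse of the standard normal cumulative distribution. The label coding (1 for trustworthy, 0 for untrustworthy), the clipping constant `eps` used to keep rates of exactly 0 or 1 finite, and the example data are all assumptions for illustration; the source does not say how AFRL handled these details.

```python
from statistics import NormalDist


def d_prime(y_true, y_pred, eps=1e-3):
    """d' = z(hit rate) - z(false-alarm rate); 1 = trustworthy, 0 = untrustworthy."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    false_alarms = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = len(y_true) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one trial of each outcome")
    # Clip rates away from 0 and 1 so the inverse normal CDF stays finite
    # (an assumed convention, not taken from the challenge rules).
    hit_rate = min(max(hits / n_pos, eps), 1 - eps)
    fa_rate = min(max(false_alarms / n_neg, eps), 1 - eps)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


def accuracy(y_true, y_pred):
    """Fraction of trials predicted correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up illustration: 90 trustworthy trials followed by 10 untrustworthy ones.
y_true = [1] * 90 + [0] * 10
always_trust = [1] * 100
# Hypothetical classifier: 72 of 90 hits, 2 of 10 false alarms.
mixed = [1] * 72 + [0] * 18 + [1] * 2 + [0] * 8

print(accuracy(y_true, always_trust), d_prime(y_true, always_trust))
print(accuracy(y_true, mixed), d_prime(y_true, mixed))
```

In this made-up example, the classifier that always predicts "trustworthy" reaches 90 percent accuracy but a d' of 0, because its hit rate and false-alarm rate are identical. The second classifier has lower accuracy (80 percent) but a d' of roughly 1.68, since it separates the two outcomes.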

The minimum performance threshold was set using a baseline solution representing current off-the-shelf techniques as a comparative benchmark. This solution was developed by the TRUST program's Test and Evaluation Team at AFRL. In addition to preparing the data sets for use by solvers, AFRL also created separate data sets for independent testing and used them to evaluate the solvers' submitted solutions.

In spite of the purely quantitative evaluation method, a number of judgment calls were required to implement it. For example, some algorithms (including the baseline classifier) were non-deterministic, running based on a random seed and creating different prediction sets for every run. For these, AFRL ran each solution 200 times; the median performance and 95-percent confidence interval were calculated from the resulting d' distribution. The median performance value was used as the estimate of solution accuracy for non-deterministic solutions, as it should be most representative of generalized accuracy with a new dataset. These guidelines were shared with solvers.
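The repeated-run procedure for non-deterministic solutions can be sketched as follows. The `run_solution` callable, the seed scheme and the toy scoring function are hypothetical, and the interval here is taken as the 2.5th to 97.5th percentiles of the run scores, which is one plausible reading of "95-percent confidence interval" rather than a confirmed description of AFRL's calculation.

```python
import random
import statistics


def summarize_runs(run_solution, n_runs=200, base_seed=0):
    """Run a seeded solution n_runs times; return (median, (low, high)).

    run_solution(seed) is a hypothetical callable returning one d' score.
    """
    scores = [run_solution(seed) for seed in range(base_seed, base_seed + n_runs)]
    median = statistics.median(scores)
    # n=40 yields cut points every 2.5 percent; the first and last are the
    # 2.5th and 97.5th percentiles of the observed scores.
    cuts = statistics.quantiles(scores, n=40, method="inclusive")
    return median, (cuts[0], cuts[-1])


def toy_solution(seed):
    """Stand-in for a non-deterministic classifier: a noisy d' near 0.8."""
    return random.Random(seed).gauss(0.8, 0.05)


median, (low, high) = summarize_runs(toy_solution)
print(median, low, high)
```

Reporting the median rather than the best run avoids rewarding a solution for a single lucky seed.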

While the evaluation technique was effective, performance on the test data set during the open period was not strongly predictive of final performance. Eight algorithms passed the threshold on the test data, but most were over-fit to that specific data set, and only one maintained high performance during the evaluation period.

Challenge Type

Analytics

How do you know if you can trust someone? The INSTINCT Challenge asked members of the American public to develop algorithms that improved predictions of trustworthiness using neural, physiological and behavioral data recorded during experiments in which volunteers made high-stakes promises and chose whether or not to keep them. Answering this question accurately is essential for society in general—but particularly so in the Intelligence Community (IC), where knowing whom to trust is often vital.

Legal Authority

Procurement authority